Immich Database Backups

Recently, I wrote about the technology I’ve been using.

Today I want to talk a little about how I’m backing up my immich database.

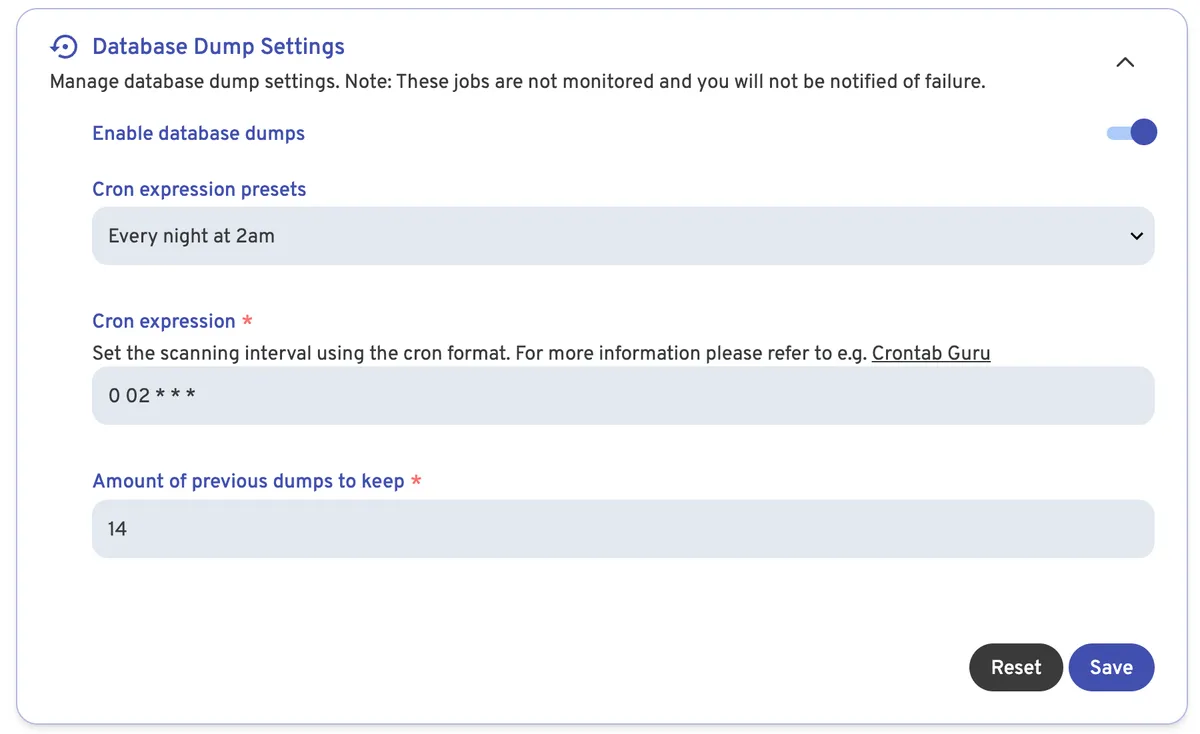

Immich has a setting that will allow you to create an automatic database dump that’s pretty easy to find within the settings menu:

You can see from the screenshot that I’m creating a database dump every night at 2am and then retaining that dump for 14 days.

It’s nice that immich includes this handy little feature, but since I don’t know much of anything about PostgreSQL databases, I’m not sure how valuable these dumps really are... for me anyway.

I’d have to know how to import those dumps into Postgres, which is running as part of a docker compose stack on my Unraid server, and who has time to learn about that stuff? If I wanted to do that I’d be a DBA, and that would be a miserable existence indeed.

It sucks to suck

I ran into an issue over the last week while I was converting my Unraid array over to a ZFS pool where I couldn’t get the immich docker stack running after moving some volumes around.

I didn’t capture the exact output of the container logs but essentially it was complaining that a database already existed but it couldn’t start it up.

Now, someone with more patience and smarts than me probably could’ve found an elegant solution to this problem by taking some time to understand how docker works and how that then relates to a containerized PostgreSQL DB.

That person would also probably eventually figure out how to tell Postgres to import these dumps. And I bet they also enjoy gross things like organic food and fancy coffee. Yuck

I’m neither patient nor smart.

I had a copy of my immich database directory on another drive in the NAS. I made that copy just after running docker-compose down on my immich containers so it had all the latest information in it.

Instead of learning how docker works, and then learning how PostgreSQL works, I did an rsync from my backup directory into the docker volume that holds my database files.

Once that was done docker-compose up worked like a charm and all my photos, metadata, and settings for immich were recovered!
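
For anyone who wants to see that restore as commands, here's a minimal sketch. All three paths are placeholders made up for illustration (the post doesn't give them), and the rsync flags are an assumption too: -a keeps the ownership and permissions Postgres expects, and --delete stops stale files in the volume from mixing with the backup. It only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch of the "drop the files back in place" restore. All paths are placeholders.
set -eu

COMPOSE_FILE="${COMPOSE_FILE:-/mnt/ftldrive/immich/docker-compose.yml}"  # placeholder
BACKUP_DIR="${BACKUP_DIR:-/mnt/otherdrive/immich-database-copy}"         # placeholder
DB_DIR="${DB_DIR:-/mnt/ftldrive/immich-database}"                        # database files used by the stack
DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = actually run them

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

restore_immich_db() {
    # Postgres must not be running while its files are replaced.
    run docker-compose -f "$COMPOSE_FILE" down
    # Trailing slashes: copy the contents of BACKUP_DIR into DB_DIR.
    run rsync -a --delete "$BACKUP_DIR/" "$DB_DIR/"
    run docker-compose -f "$COMPOSE_FILE" up -d
}

restore_immich_db
```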

Yay! Go me!

This is a very sysadmin way of doing things. I don’t like databases, but I do like files.

Given the option to interact with a database vs just dropping some files in place, I’ll choose dropping files in place every time.

Which led me to an epiphany about how to keep the database backed up in the easiest possible way I could imagine.

File System Snapshots

I should take a small step back and describe the running environment a little bit, because it greatly affects how my backup strategy for immich works. It might mean that it won’t work for you. In which case I’m sorry you like to do things the wrong way.

My Setup

My NAS has two main storage pools.

The first is a single 2TB NVME drive that is formatted with BTRFS.

I chose BTRFS because it can create nearly instantaneous file system snapshots, and doesn’t require additional resources from memory the way ZFS does. Also, being a single disk I don’t have to worry about any issues with BTRFS raid.

The second pool consists of four 6TB spinning disks. These are configured in ZFS raidz1, which is basically RAID5 but better because it doesn’t suffer from the write hole.

In this case ZFS was chosen for a few reasons.

- Snapshots

- raidz1 allows me to use all 4 of my disks without losing half the space to mirroring.

- BTRFS lacking official support for RAID 5 made ZFS the winner here.

Storage Pool Layout

| ftldrive | zpool |
|---|---|
| NVME | spinning rust |
| 2TB | 18TB |
| BTRFS | ZFS |
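
The 18TB figure for the zpool is simple arithmetic: raidz1 spends one disk's worth of space on parity, so usable space is roughly (disks - 1) x disk size. A quick sketch, ignoring filesystem overhead, with striped two-way mirrors for comparison (the function names are made up for this example):

```shell
#!/bin/sh
# Usable capacity in TB, ignoring filesystem overhead.
# $1 = number of disks, $2 = size of each disk in TB.

# raidz1: one disk's worth of space goes to parity.
raidz1_usable_tb() { echo $(( ($1 - 1) * $2 )); }

# Striped two-way mirrors: half the raw space holds copies.
mirror_usable_tb() { echo $(( $1 * $2 / 2 )); }

raidz1_usable_tb 4 6   # prints 18
mirror_usable_tb 4 6   # prints 12
```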

I have the immich database running on my fast NVME storage - aptly named “ftldrive”.

Since ftldrive is formatted as BTRFS, I can utilize filesystem snapshots to get a frozen in time copy of the files on that subvolume which I can then copy to my zpool for safe keeping.

The caveat

The one thing standing in the way of this awesome solution is that the database can’t be running when the snapshot occurs.

Databases, including PostgreSQL, are constantly writing to the files I want to capture.

Because of this, I can’t just take the snapshot whenever I want and expect the files on disk at that moment to be in good working order.

But why?

This is because of a concept called Write-Ahead Logging (WAL) in database speak.

A high level view that DBAs will probably have a problem with (because what don’t they have a problem with?) is this:

When a database performs a transaction, it has to record the change in the write-ahead log before the change is written to the actual data files. So, if a transaction is in flight at the moment the snapshot is taken, there is a good chance that the WAL record will be different from what’s been written to the data files.

In short this means, the whole thing is now fucked up.

The solution for me is to stop the containers and then take the snapshot.

How the sausage gets made

I needed a script that would do the following in this exact order:

- docker-compose down

- Create a BTRFS snapshot of the subvolume /mnt/ftldrive/immich-database

- docker-compose up

- rsync the contents of the snapshot to my zpool for safe keeping.

- Remove the snapshot - no reason to keep it hanging around.

The order here is important because I want to reduce the downtime of the immich stack as much as possible.

I only need the database to be down long enough for a snapshot to be created. Once that’s done, I’m free to start immich back up and can copy the contents of the snapshot while the service is up and running.

In my testing over the last few days the downtime is something like 5 seconds, which for my use case is damn near no downtime at all.
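
For a rough idea of what those steps look like in shell, here's a minimal sketch. It is not the script linked below, just an illustration of the ordering. Only the subvolume path comes from this post; the compose file location, snapshot path, and zpool destination are placeholders, and the rsync flags (-a --delete) are an assumption. The one thing added beyond the list above is a guard that starts the containers again if the snapshot fails, so a bad night doesn't leave immich down. It prints the commands instead of running them unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Sketch of the stop -> snapshot -> start -> rsync -> cleanup flow.
# Only SUBVOLUME comes from the post; every other path is a placeholder.
set -eu

COMPOSE_FILE="${COMPOSE_FILE:-/mnt/ftldrive/immich/docker-compose.yml}"  # placeholder
SUBVOLUME="${SUBVOLUME:-/mnt/ftldrive/immich-database}"                  # from the post
SNAPSHOT="${SNAPSHOT:-/mnt/ftldrive/immich-database-snapshot}"           # placeholder, same BTRFS filesystem
DEST="${DEST:-/mnt/zpool/backups/immich-database}"                       # placeholder
DRY_RUN="${DRY_RUN:-1}"  # 1 = print commands only, 0 = actually run them

log() { printf '%s - %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$*"; }

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

backup_immich_db() {
    log "Starting Immich backup process"

    log "Stopping Docker containers"
    run docker-compose -f "$COMPOSE_FILE" down

    log "Creating BTRFS snapshot of $SUBVOLUME"
    if ! run btrfs subvolume snapshot -r "$SUBVOLUME" "$SNAPSHOT"; then
        # Never leave the stack down just because the snapshot failed.
        log "Snapshot failed, starting Docker containers again"
        run docker-compose -f "$COMPOSE_FILE" up -d
        return 1
    fi

    log "Starting Docker containers"
    run docker-compose -f "$COMPOSE_FILE" up -d

    # The stack is back up; the slow copy reads from the frozen snapshot.
    log "Backing up data using rsync"
    run rsync -a --delete "$SNAPSHOT/" "$DEST/"

    log "Removing snapshot"
    run btrfs subvolume delete "$SNAPSHOT"

    log "Backup completed successfully!"
}

backup_immich_db
```

Run it as-is to see the five commands in order, then run it again with DRY_RUN=0 once the paths match your own setup.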

I’m a vibe coder now

I pumped these instructions into Sonnet 3.7 and asked it to spit me out a bash script that would accomplish this work and this is the result:

Awesome immich db backup script

I ran this script today so I could give you some sample output from the log it creates.

2025-05-10 15:02:20 - Starting Immich backup process

2025-05-10 15:02:20 - Stopping Docker containers

2025-05-10 15:02:22 - Creating BTRFS snapshot of /mnt/ftldrive/immich-database

2025-05-10 15:02:22 - Starting Docker containers

2025-05-10 15:02:25 - Backing up data using rsync

2025-05-10 15:02:27 - Removing snapshot

2025-05-10 15:02:27 - Backup completed successfully!

You can see the total time here is pretty fast in general but the 4 timestamps in the middle show just how fast this is.

2025-05-10 15:02:20 - Stopping Docker containers

2025-05-10 15:02:22 - Creating BTRFS snapshot of /mnt/ftldrive/immich-database

2025-05-10 15:02:22 - Starting Docker containers

2025-05-10 15:02:25 - Backing up data using rsync

The immich service went down at 15:02:20 and was back up by 15:02:25.

That’s 5 seconds, pretty much just the time it takes to restart the service.

I don’t need to leave services down while I do the rsync since I’m not performing that operation on live data which means there’s no chance of file corruption.
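
If you'd rather not do timestamp math by eye, the downtime can be pulled straight out of the log. This is a small sketch that assumes the log line wording shown above and that both lines fall on the same day; the function name is made up for this example.

```shell
#!/bin/sh
# Read the backup log on stdin and print how many seconds the stack was down:
# from "Stopping Docker containers" to the first line logged after
# docker-compose up returned ("Backing up data using rsync").
downtime_seconds() {
    awk '
        # Convert HH:MM:SS to seconds since midnight.
        function secs(t,    p) { split(t, p, ":"); return p[1] * 3600 + p[2] * 60 + p[3] }
        /Stopping Docker containers/  { down = secs($2) }
        /Backing up data using rsync/ { print secs($2) - down; exit }
    '
}

# Prints 5 for the excerpt from this post.
downtime_seconds <<'EOF'
2025-05-10 15:02:20 - Stopping Docker containers
2025-05-10 15:02:22 - Creating BTRFS snapshot of /mnt/ftldrive/immich-database
2025-05-10 15:02:22 - Starting Docker containers
2025-05-10 15:02:25 - Backing up data using rsync
EOF
```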

Working well for me

I know this is probably not the traditional way to do a database backup. But given the architecture of my NAS file systems and the fact that I can go without this service for 5 seconds a day, I think it’s a good way to ensure I can always roll back without having to also become a DBA on the side.